I recommend boning up on robot pursuit avoidance now. How to Survive a Robot Uprising is the book you need. You'll read it in an hour, and it may save your life one day, or at least give you suggestions on how to get your leg out of an annoyed Hoover.

File under: organisms, communication, machine and technology. Decode at your leisure.

Epitaphs have been written for the now-defunct Mars Phoenix Lander, and they're great.

| Rank | Popular Vote | Editorial College |
|---|---|---|
| 1 | Veni, vidi, fodi. (I came, I saw, I dug) by Graham Vosloo | I dug my own grave. And analyzed it. by Dorwinrin |
| 2 | So long and thanks for all the ice. by D. Adams | Error 404: Lander Not Found!\* by Fred Rogers |
| 3 | It is enough for me. But for you, I plead: go farther, still. by Fernando Rojas | Water, water, everywhere, and not a drop that isn't already sublimating into the thin, frigid atmosphere. by Dylan Tweney |

One of my favourite Twitter 'voices' is the MarsPhoenix lander.

I should stay well-preserved in this cold. I'll be humankind's monument here for centuries, eons, until future explorers come for me ;-)

I'm not mobile, so here I'll stay. My mission will draw to an end soon, and I can't imagine a greater place to be than here.

When I go to sleep, the mission team will post occasional updates here for me. Results of science analyses, for example.

There is a future mission @CaptainAnderson. @MarsScienceLab is being built right now at JPL for launch next year. I hope you'll all follow.

Martian seasons are very long @mridul and winter here is really tough. Next spring is one year away. Next summer is May 2010.

It's not quite the robot AI of tomorrow (for now it's a NASA tech doing the talking), but it does trigger my fascination with this lander, and I notice a real emotional response to the robot's monologue.

Robots talking on other worlds.

Okay, so the economy graphs have been going up and down recently, a bit like a fairground rollercoaster or the preferred sea patterns of surfers, but there is one graph that has only been on the increase since 1900.


It's the tech curve graph.

Recently I was browsing in a bookstore, and increasingly I gravitate to the easy-read science section while my girlfriend stalks the psychology and brain-related materials. One book stuck out, literally and semantically: the big black one called The Singularity Is Near: When Humans Transcend Biology (Viking Penguin, ISBN 0-670-03384-7) by Raymond Kurzweil. Within seconds of looking at the index I knew I was going to buy it. Kurzweil is a futurist who has worked in numerous fields, including speech recognition, text-to-speech synthesis and AI, and he also developed electronic keyboards (the Kurzweil synth series). He has distilled what he has learned into this book, which tries to predict where we are going while backing it up with a serious amount of empirical data. I learned today that the book is also going to be a movie.

I'm halfway through, but even when the book isn't in front of me it's in the back of my mind.

In the first quarter of the book, Kurzweil aggregates data on all the facets of technology that are experiencing exponential growth. The most famous of these is Moore's Law, which concerns the number of transistors that can be placed inexpensively on an integrated circuit. That number has increased exponentially, doubling approximately every two years.
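To make "doubling approximately every two years" concrete, here is a small Python sketch of the compounding arithmetic. It assumes a clean, fixed doubling period of exactly two years (real data only approximates this), and the function name `moores_law_factor` is hypothetical, chosen for illustration.

```python
def moores_law_factor(years: float, doubling_period: float = 2.0) -> float:
    """Growth multiple after `years`, assuming one doubling every `doubling_period` years."""
    return 2 ** (years / doubling_period)


if __name__ == "__main__":
    for years in (2, 10, 20, 40):
        # e.g. 20 years at a 2-year doubling period is 10 doublings: 2**10 = 1024x
        print(f"{years:>2} years -> {moores_law_factor(years):,.0f}x")
```

Twenty years is ten doublings, roughly a thousandfold increase, which is why a modest-sounding doubling period compounds into such dramatic curves.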

Chart: Moore's Law, The Fifth Paradigm (calculations per second per $1,000, logarithmic plot)

Keep in mind that these are log plots, so a straight line is really an exponential curve: on an ordinary linear scale it would be screaming off the chart and through the ceiling.
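A quick way to see why a straight line on a log plot means exponential growth: take the logarithm of an exponential series and each step rises by the same amount. The sketch below uses made-up numbers (not Kurzweil's data) and a hypothetical helper, `log_steps`, that returns the gap between successive log10 values.

```python
import math


def log_steps(values: list[float]) -> list[float]:
    """Differences between successive log10 values.

    Constant differences mean the points lie on a straight line on a log plot.
    """
    logs = [math.log10(v) for v in values]
    return [b - a for a, b in zip(logs, logs[1:])]


if __name__ == "__main__":
    exponential = [1000 * 2 ** n for n in range(6)]  # doubles every step
    linear = [1000 + 1000 * n for n in range(6)]     # adds a constant every step

    # Exponential: every step adds log10(2) ~= 0.301, so the log plot is a straight line.
    print([round(s, 3) for s in log_steps(exponential)])
    # Linear: the log steps shrink, so the curve flattens out on a log plot.
    print([round(s, 3) for s in log_steps(linear)])
```

The contrast is the point: steady linear growth bends over on a log scale, so a chart that stays straight on a log axis is compounding.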

The book goes on to illustrate that technology is exploding on all fronts, with plots showing exponential change in many other technologies.

All the graphs for this book are online at http://singularity.com/charts/

Reading through each of these sections and seeing the actual data leaves you in no doubt that we are in a period of cataclysmic transformation. You may have had a hunch that computers are getting bigger and more powerful and may someday replicate human thinking, but Kurzweil shows you that it's nigh-on inevitable.

There are four key themes in his book:

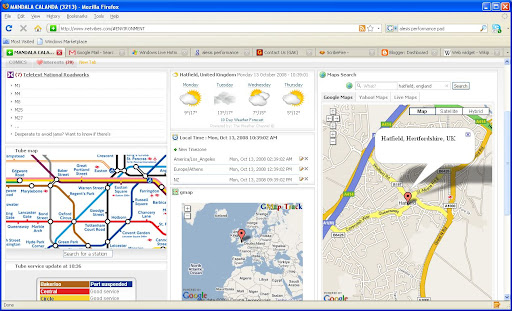

The first big service in this space was Nokia's WidSets, a Java program for your mobile that let you add, view and configure widgets directly from the phone. To make life easier, Nokia also provided a fixed-line service so users could more easily manage how the service appeared on their mobile.

Mobile widget solutions have now moved on from standalone application-style approaches, like WidSets, to richly integrated solutions that harmonise with a user's idle screen (your phone's dashboard). The area is in transition, and there are currently no standards for doing this across multiple handset platforms and phone models.

The fight is on between telecoms companies, handset manufacturers and large service providers.

Nokia has an S60 solution it is developing with the Symbian Foundation; Opera has a widget solution that it is rolling out with operators; and handset manufacturers are replacing their 'program menus' with richer widget-style dashboards.

My dad would never use a mobile browser, but I do believe he would use a Celtic football club widget that alerted him to scores and news and gave him simple links to video footage, right from his mobile phone's home screen. The simplicity and immediacy of web services has changed with these little critters called widgets, and if you don't come across this innocuous word alongside some market superlatives in 2009, then I, for one, will be very surprised.

Blogged with MessageDance


I've become increasingly interested in the overlap of biology and computing over the past few years. It began with the realisation that the web and the stock market are really biologic in nature, with their fault tolerance, nodal shape, replication of information and distributed locus of control, and it was further prompted by work I undertook on AI systems for advertising and social computing solutions. I'm enjoying watching this overlap develop into a moderate obsession, and I am trying to steer my thinking on all things computing in a more 'biologic' direction. I've always been a strong believer that people involved in one discipline can offer fresh insights into other sciences and that a good set of 'first principles' can work well across domains. This cross-pollination was the grease that helped the machine of the Industrial Revolution into being, and obliquely it's also the reason I give for sporting sideburns like some

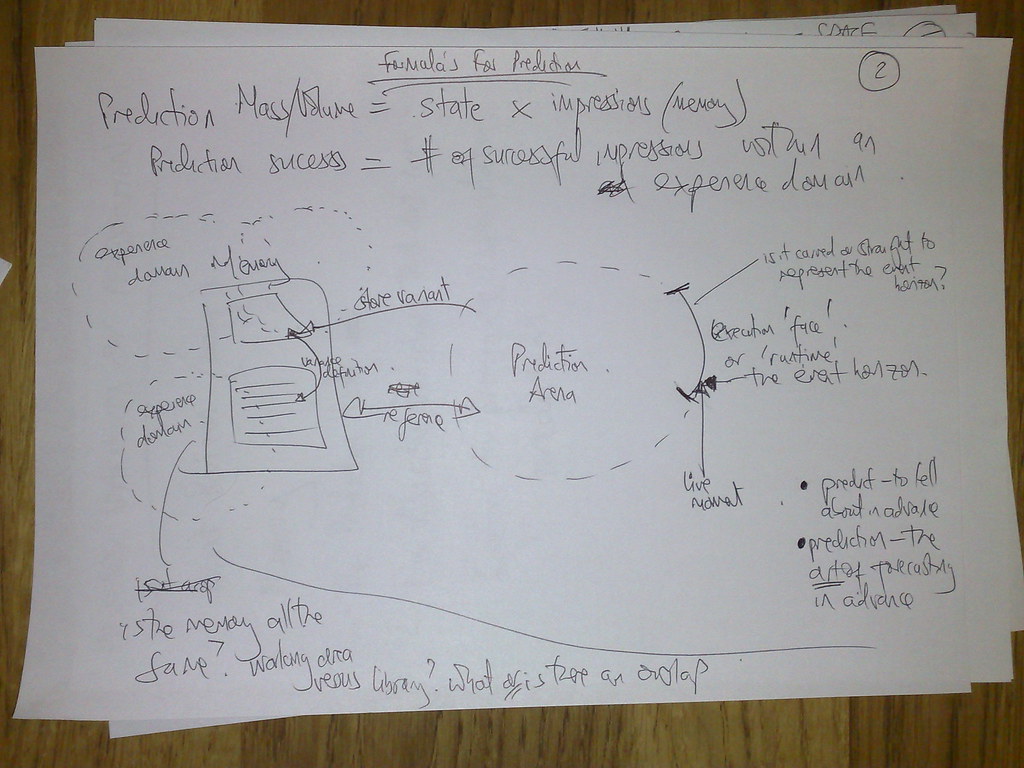

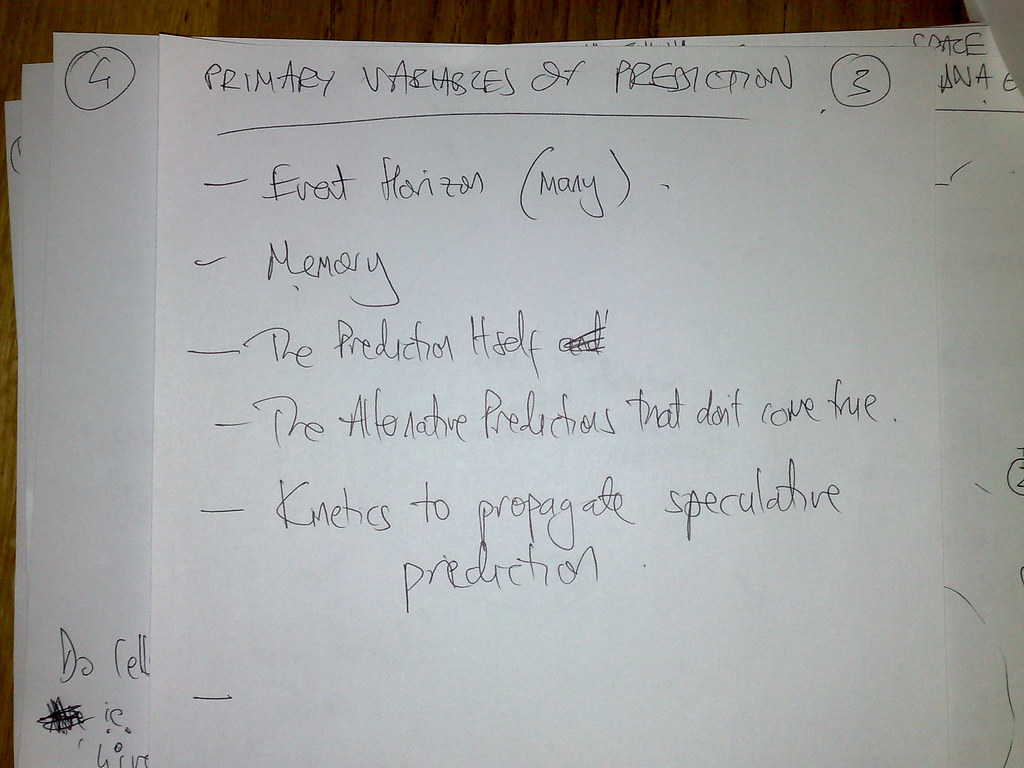

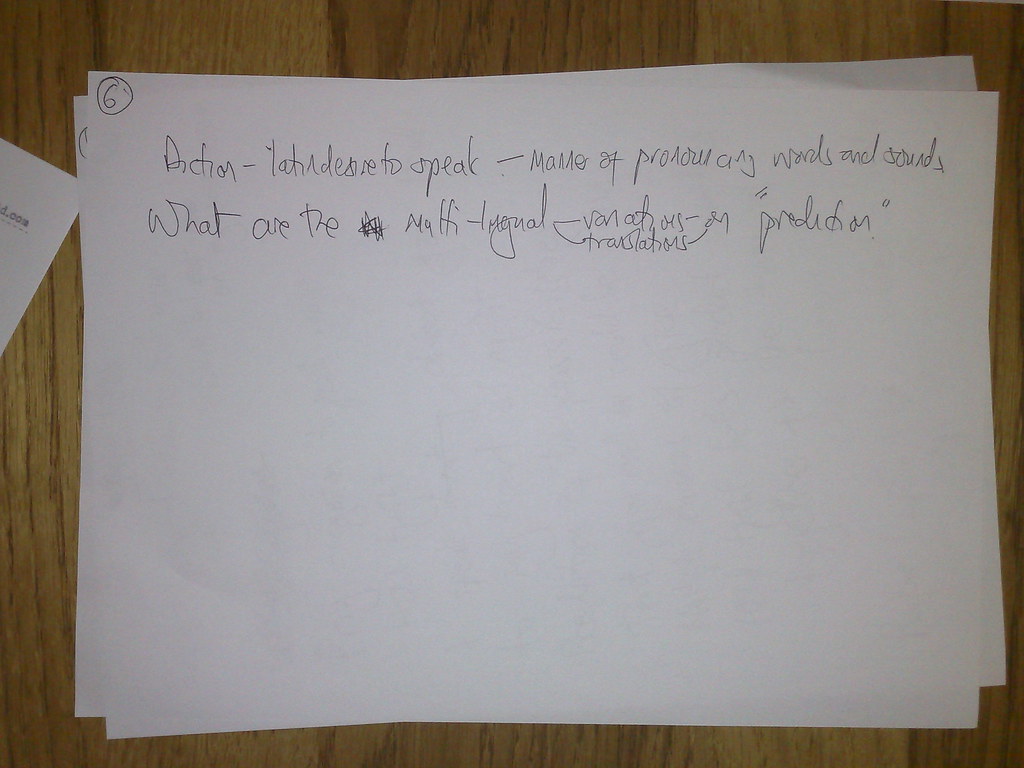

This post is inspired by Jeff Hawkins, who is working on models of the brain and attempting to derive an overarching theory of it: something that, despite the reams of data we have on the brain, we are as yet unable to articulate. His talk was on using a prediction model as the primary approach to developing a theory of the brain, and it got my mind racing.


After graduating from Cornell in June 1979, Hawkins read a special issue of Scientific American on the brain. In it, Francis Crick lamented the lack of a grand theory explaining how the brain functions.[3] Initially, Hawkins attempted to start a new department on the subject at his employer, Intel, but was refused. He also unsuccessfully attempted to join the MIT AI Lab. He eventually decided he would try to find success in the computer industry and then use it to support his serious work on brains, as described in his book On Intelligence.

Jeff thinks that the reason we still haven't managed to define intelligence well is that we don't have this overarching theory of the brain or, more accurately, of intelligence. Jeff postulates that the brain isn't like a powerful computer processor; instead, it's more like a memory system that records everything we experience and helps us predict, intelligently, what will happen next.
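To make the "memory that predicts" idea a little more concrete, here is a deliberately tiny Python toy: it records which event followed which and predicts the most frequently seen successor. This is only an illustration of prediction-from-memory under that simplifying assumption; it is not Hawkins's model, and the class name `SequenceMemory` and the example events are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional


class SequenceMemory:
    """Toy memory: stores observed transitions and predicts the likeliest next event."""

    def __init__(self) -> None:
        # For each event, count how often each other event came straight after it.
        self._next_counts = defaultdict(Counter)

    def observe(self, sequence: list[str]) -> None:
        """Record every consecutive pair (current, next) in an experienced sequence."""
        for current, nxt in zip(sequence, sequence[1:]):
            self._next_counts[current][nxt] += 1

    def predict(self, current: str) -> Optional[str]:
        """Return the most frequently seen successor of `current`, or None if unseen."""
        counts = self._next_counts.get(current)
        if not counts:
            return None
        return counts.most_common(1)[0][0]


if __name__ == "__main__":
    memory = SequenceMemory()
    memory.observe(["alarm", "coffee", "commute", "work"])
    memory.observe(["alarm", "coffee", "news", "commute"])
    memory.observe(["alarm", "snooze", "alarm", "coffee"])
    print(memory.predict("alarm"))    # coffee (seen 3 times vs snooze once)
    print(memory.predict("holiday"))  # None: nothing remembered, so no prediction
```

In this toy, prediction is nothing more than a lookup against stored experience, which is the intuition the paragraph describes; a real theory of the brain has to cope with noisy, high-dimensional, hierarchical input that this sketch ignores.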

Things like these stop me sleeping at night, and last Sunday I leaned over to my girlfriend at

I slipped out of bed and knocked up the notes below. They are presented here unedited: what you see is my first-pass brain dump of some thoughts and concepts surrounding a prediction model. (It's probably best to click on one, open up the set in Flickr and view it from there.)

If you are involved in this area at all, I would love to hear from you, as I intend to delve deeper. Physics has a lot to add here, with work in quantum theory and calculations concerning black-hole event horizons all relevant to a model of the brain and prediction.

We can put this data on Google Maps, provide strong links between place and time, and invent applications that use this data to create new environments. We don't even need the common map metaphor to see our data: IBM's wonderful tool 'Many Eyes' lets us analyse data in interactive graphs and visualisations. Data can be processed as simple XML, allowing automated feeds of information and graphic representation.

Photosynth allows a feed of photos to build up a map of the earth and its places, using not just flyover images from aeroplanes or satellite data but our own photographs and even illustrations. Photosynth uses public images, and it doesn't matter whether these photos are taken with a £10 disposable camera or a posh SLR: it can stitch them together into a never-ending tapestry that lets you move around geographic areas and locations with ease.

With Photosynth you can:

You can try this experience on the web right now, but be warned, Mac fans: it's PC-only for now.

One of the biggest problems in Biologic Computing today is the predictability of bacterium's movements...


spoken through SpinVox

What was actually said:

"We are currently studying User Generated Content and we're not obsessed with trying to become the next Facebook"

spoken through SpinVox

What was actually said:

"The majority of computing is working collectively towards Virtual Reality"

spoken through SpinVox